LangGraph.js AI Agent Template is an open-source, production-ready Next.js starter project for building AI agents with human-in-the-loop tool approval, persistent conversation memory, and real-time streaming. It uses LangGraph.js to construct stateful, multi-actor applications.

This project integrates the Model Context Protocol (MCP), which allows for dynamic tool management. You can add or remove tools through a web interface without changing the underlying code.

Features

✋ Human-in-the-loop tool approval system with granular control over agent actions before execution.

💾 Persistent conversation memory using LangGraph checkpointer with PostgreSQL backend for full history preservation.

⚡ Real-time streaming interface built on Server-Sent Events with optimistic UI updates through React Query.

🔌 Support for both stdio and HTTP MCP servers with automatic tool name prefixing to prevent conflicts.

🤖 Multi-model provider support including OpenAI GPT-4 and Google Gemini with configurable defaults.

🧵 Thread-based conversation organization that enables seamless resume across sessions.

🎨 Modern UI built with shadcn/ui components, Tailwind CSS, and Lucide icons.

🔄 Error recovery and graceful degradation for robust production deployments.

Use Cases

- Advanced Customer Support Agents. Develop agents that query order information from a database or API, with a human support operator approving each action before any data is sent to the user.

- Developer Assistant Tools. Create an agent that helps with coding tasks, such as reading files or running scripts, where the developer must approve each action to maintain security.

- Content Creation Workflows. Build a multi-step content generation agent that uses various tools for research, writing, and editing, allowing a user to review and approve the output at each stage.

- Data Analysis and Reporting. Construct an agent that connects to multiple data sources, performs analysis, and generates reports, with an analyst guiding the tool selection and parameter modification.

How to Use It

1. Clone the repository from GitHub and navigate into the project directory. Then, install the necessary packages using pnpm.

git clone https://github.com/IBJunior/fullstack-langgraph-nextjs-agent.git

cd fullstack-langgraph-nextjs-agent

pnpm install

2. Create a local environment file by copying the example file.

cp .env.example .env.local

3. Open the newly created .env.local file and add your database connection URL and your chosen AI model’s API key.

NEXT_PUBLIC_API_BASE_URL=http://localhost:3000/api/agent

POSTGRES_USER=user

POSTGRES_PASSWORD=password

POSTGRES_DB=mydb

DATABASE_URL=postgresql://user:password@localhost:5434/mydb?schema=public

GOOGLE_API_KEY=your_google_api_key_here

OPENAI_API_KEY=your_openai_api_key_here

4. Use Docker Compose to start the PostgreSQL database service in the background.

docker compose up -d

5. Apply the database schema and generate the Prisma client.

pnpm prisma:generate

pnpm prisma:migrate

6. Start the Next.js development server. Once it is running, open http://localhost:3000 in your web browser to access the AI agent.

pnpm dev

Adding MCP Servers

Access the settings panel by clicking the gear icon in the sidebar. Click “Add MCP Server” to configure new tool sources.

The template supports two server types: stdio for command-line tools and HTTP for web-based services.

For a filesystem server that allows the agent to read and write files:

{
  "name": "filesystem",
  "type": "stdio",
  "command": "npx",
  "args": ["@modelcontextprotocol/server-filesystem", "/Users/yourname/Documents"]
}

The name field creates a unique identifier for the server. The command and args fields specify how to launch the stdio server. The template automatically prefixes tool names with the server name to prevent conflicts when multiple servers provide similar tools.
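The prefixing idea can be sketched in a few lines. This is only an illustration of the concept, not the template's actual code: the `McpToolInfo` shape, the `prefixToolNames` helper, and the `__` separator are all assumptions made for the example.

```typescript
// Hypothetical shape of a tool as reported by an MCP server.
interface McpToolInfo {
  name: string;
  description: string;
}

// Assumed separator; the template's actual naming format may differ.
const PREFIX_SEPARATOR = "__";

// Prefix each tool name with its server name so that two servers can both
// expose a tool with the same name without colliding.
function prefixToolNames(serverName: string, tools: McpToolInfo[]): McpToolInfo[] {
  return tools.map((tool) => ({
    ...tool,
    name: `${serverName}${PREFIX_SEPARATOR}${tool.name}`,
  }));
}

// Two servers that both expose "read_file".
const merged: McpToolInfo[] = [
  ...prefixToolNames("filesystem", [{ name: "read_file", description: "Read a local file" }]),
  ...prefixToolNames("web-api", [{ name: "read_file", description: "Fetch a remote file" }]),
];

console.log(merged.map((tool) => tool.name));
```

After prefixing, the two `read_file` tools carry distinct names, so the model can address each one unambiguously.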

For HTTP-based tools that expose MCP endpoints:

{
  "name": "web-api",
  "type": "http",
  "url": "http://localhost:8080/mcp",
  "headers": {
    "Authorization": "Bearer your-token"
  }
}

HTTP servers require a URL endpoint. Optional headers can include authentication tokens or other metadata needed by the service.
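If you handle these configurations in your own code, a discriminated union keeps the two server types apart at compile time. The sketch below mirrors the fields shown in the two JSON examples; the type names and the `validateServerConfig` helper are hypothetical and may not match how the template models or validates its settings.

```typescript
// Hypothetical types mirroring the two JSON examples; the template's real
// types may be named and shaped differently.
interface StdioServerConfig {
  name: string;
  type: "stdio";
  command: string;
  args?: string[];
}

interface HttpServerConfig {
  name: string;
  type: "http";
  url: string;
  headers?: Record<string, string>;
}

type McpServerConfig = StdioServerConfig | HttpServerConfig;

// Return a list of problems; an empty list means the config looks usable.
function validateServerConfig(config: McpServerConfig): string[] {
  const problems: string[] = [];
  if (config.name.trim() === "") {
    problems.push("name must not be empty");
  }
  if (config.type === "stdio") {
    // Narrowed to StdioServerConfig in this branch.
    if (config.command.trim() === "") {
      problems.push("stdio servers need a command");
    }
  } else if (!/^https?:\/\/\S+$/.test(config.url)) {
    // Narrowed to HttpServerConfig in this branch.
    problems.push("http servers need an http(s) url");
  }
  return problems;
}

const httpServer: McpServerConfig = {
  name: "web-api",
  type: "http",
  url: "http://localhost:8080/mcp",
  headers: { Authorization: "Bearer your-token" },
};

console.log(validateServerConfig(httpServer));
```

Checking `config.type` first lets the compiler narrow the union, so `command` is only read on stdio entries and `url` only on HTTP entries.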

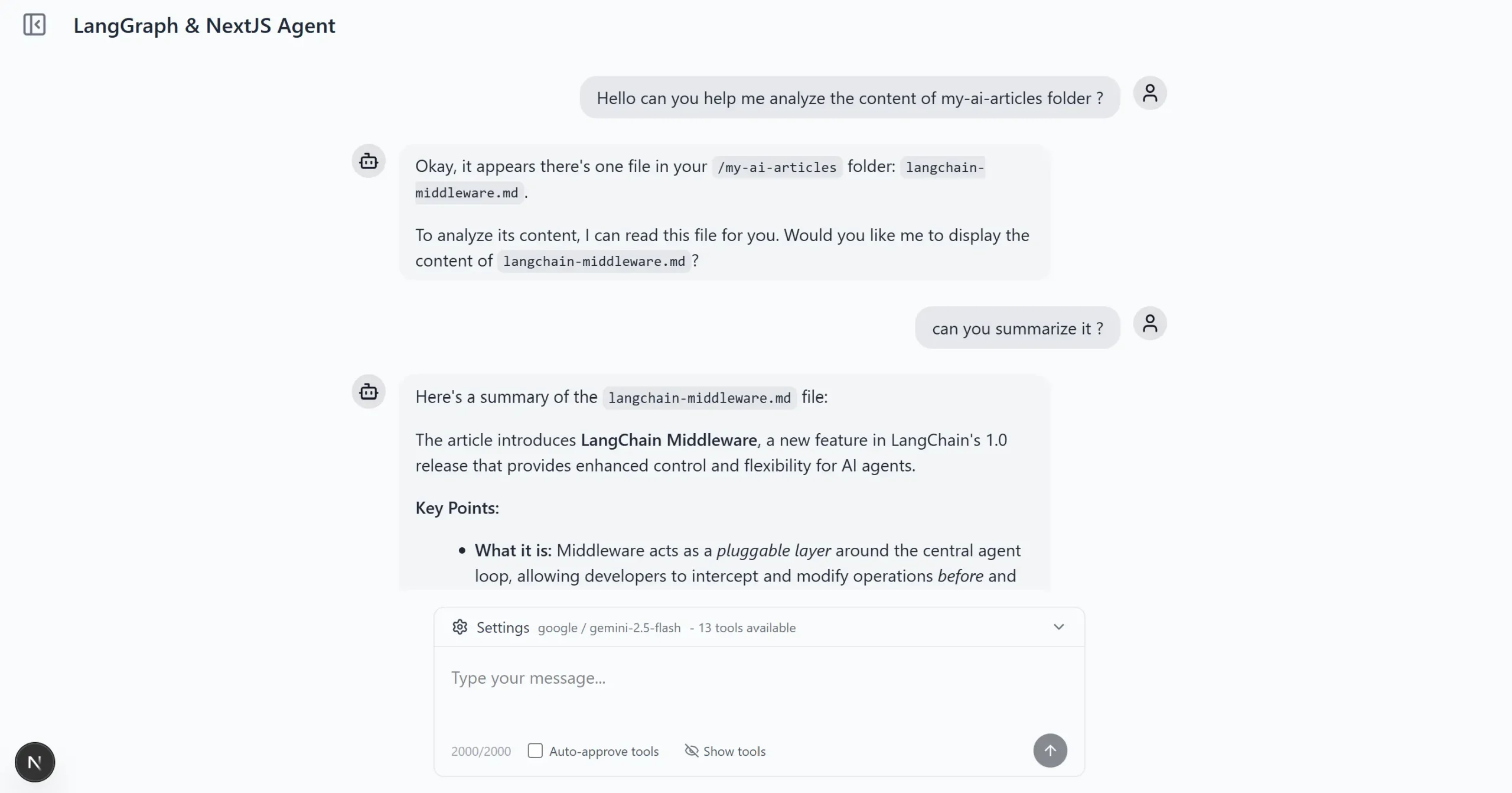

Configuring Tool Approval Workflow

The template implements a human-in-the-loop system where the agent requests permission before executing tools. When the agent wants to use a tool, the interface displays the tool name, parameters, and description. You can approve the action, deny it, or modify parameters before execution.

The agent builder in src/lib/agent/builder.ts defines the approval workflow using LangGraph’s interrupt mechanism. The StateGraph includes three main nodes: agent, tool_approval, and tools. After the agent node suggests a tool call, execution pauses at tool_approval until you provide input through the UI.

To enable automatic approval for trusted environments, modify the agent configuration to bypass the approval step. This is useful for development or when running agents in controlled environments where tool execution does not require oversight.
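The approve, deny, and modify outcomes can be modeled as a small decision function. The following is a minimal sketch under stated assumptions: the type names, the `autoApprove` flag, and the `askReviewer` callback are hypothetical stand-ins, and the template itself relies on LangGraph's interrupt mechanism rather than a plain function call.

```typescript
// Hypothetical shapes; field names are illustrative, not taken from the template.
interface PendingToolCall {
  toolName: string;
  args: Record<string, unknown>;
}

type ApprovalDecision =
  | { action: "approve" }
  | { action: "deny"; reason?: string }
  | { action: "modify"; args: Record<string, unknown> };

type ApprovalOutcome =
  | { execute: true; call: PendingToolCall }
  | { execute: false; message: string };

// Turn a reviewer's decision into either a tool call to run or a refusal message.
function applyDecision(call: PendingToolCall, decision: ApprovalDecision): ApprovalOutcome {
  switch (decision.action) {
    case "approve":
      return { execute: true, call };
    case "modify":
      // Run the tool with the reviewer's edited parameters merged over the originals.
      return { execute: true, call: { ...call, args: { ...call.args, ...decision.args } } };
    case "deny":
      return { execute: false, message: decision.reason ?? `Tool call "${call.toolName}" was denied.` };
  }
}

// Assumed flag for trusted environments: when set, the reviewer is never consulted.
function reviewToolCall(
  call: PendingToolCall,
  autoApprove: boolean,
  askReviewer: (call: PendingToolCall) => ApprovalDecision,
): ApprovalOutcome {
  const decision: ApprovalDecision = autoApprove ? { action: "approve" } : askReviewer(call);
  return applyDecision(call, decision);
}

const outcome = reviewToolCall(
  { toolName: "filesystem__read_file", args: { path: "/tmp/a.txt" } },
  false,
  () => ({ action: "modify", args: { path: "/tmp/b.txt" } }),
);

console.log(outcome);
```

Keeping the decision logic in a pure function makes the bypass path easy to audit: with `autoApprove` set, every call is approved unchanged.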

Working with Persistent Memory

The template uses LangGraph’s PostgreSQL checkpointer to maintain conversation state across sessions. Each conversation belongs to a thread, which stores all messages and agent states. When you create a new thread, the system assigns a unique identifier and initializes an empty checkpoint.

The checkpointer saves agent state after each step, including messages, tool calls, and approval decisions. When you return to a thread, the agent loads the complete history and continues from the last checkpoint. This enables long-running conversations that span multiple sessions.
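To make the save-and-resume cycle concrete, here is a toy in-memory store keyed by thread ID. It is a conceptual sketch only: `InMemoryCheckpointStore` and `AgentCheckpoint` are hypothetical names, and the template uses LangGraph's PostgreSQL checkpointer, which persists far richer state than a list of strings.

```typescript
// Hypothetical, simplified checkpoint: a step counter plus the messages so far.
interface AgentCheckpoint {
  step: number;
  messages: string[];
}

class InMemoryCheckpointStore {
  private checkpoints = new Map<string, AgentCheckpoint[]>();

  // Record the state after a step; each thread keeps its own history.
  save(threadId: string, messages: string[]): AgentCheckpoint {
    const history = this.checkpoints.get(threadId) ?? [];
    const checkpoint: AgentCheckpoint = { step: history.length, messages: [...messages] };
    history.push(checkpoint);
    this.checkpoints.set(threadId, history);
    return checkpoint;
  }

  // Resuming a thread means loading its most recent checkpoint.
  latest(threadId: string): AgentCheckpoint | undefined {
    const history = this.checkpoints.get(threadId);
    return history === undefined ? undefined : history[history.length - 1];
  }
}

const store = new InMemoryCheckpointStore();
store.save("thread-1", ["user: hello"]);
store.save("thread-1", ["user: hello", "assistant: hi, how can I help?"]);

// A later session picks up where the previous one stopped.
const resumed = store.latest("thread-1");
console.log(resumed);
```

Because every step appends a full snapshot, storage grows with conversation length, which is the same trade-off the real checkpointer makes.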

The Thread model in prisma/schema.prisma defines the database structure:

model Thread {
  id        String    @id @default(cuid())
  title     String?
  createdAt DateTime  @default(now())
  updatedAt DateTime  @updatedAt
  messages  Message[]
}

Messages link to threads through a foreign key relationship. The checkpointer stores serialized state in a separate table that LangGraph manages automatically.

Streaming Responses

The template implements Server-Sent Events for real-time response streaming. The API route at src/app/api/agent/stream/route.ts handles incoming messages, invokes the agent, and streams chunks back to the client.

The streaming service processes different event types including messages, tool calls, and errors. The client-side hook in src/hooks/useChatThread.ts uses React Query to manage optimistic updates and error handling. When you send a message, the UI immediately displays it and shows a loading indicator while waiting for the agent response.

The agent streams tokens as they are generated, providing immediate feedback. Tool calls appear in the stream with approval prompts. After you approve or deny a tool, the stream continues with updated content.
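On the wire, each Server-Sent Event is a small text frame made of an `event:` line, a `data:` line, and a blank line. The sketch below shows one way to serialize and parse such frames. The event names and payload fields are assumptions for illustration; the template's actual event schema may differ.

```typescript
// Hypothetical event payloads covering the three kinds of events described above.
type AgentStreamEvent =
  | { type: "message"; content: string }
  | { type: "tool_call"; toolName: string }
  | { type: "error"; message: string };

// Serialize one event as an SSE frame. JSON.stringify escapes newlines inside
// strings, so the payload always fits on a single "data:" line.
function toSseFrame(event: AgentStreamEvent): string {
  return `event: ${event.type}\ndata: ${JSON.stringify(event)}\n\n`;
}

// Parse a buffer of complete frames back into events, as a client might do.
function parseSseFrames(buffer: string): AgentStreamEvent[] {
  const events: AgentStreamEvent[] = [];
  for (const frame of buffer.split("\n\n")) {
    const dataLine = frame.split("\n").find((line) => line.startsWith("data: "));
    if (dataLine !== undefined) {
      events.push(JSON.parse(dataLine.slice("data: ".length)) as AgentStreamEvent);
    }
  }
  return events;
}

const wire =
  toSseFrame({ type: "message", content: "Looking that up..." }) +
  toSseFrame({ type: "tool_call", toolName: "filesystem__read_file" });

console.log(parseSseFrames(wire));
```

A real client also has to buffer partial frames that arrive split across network chunks; this sketch assumes the buffer contains only complete frames.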

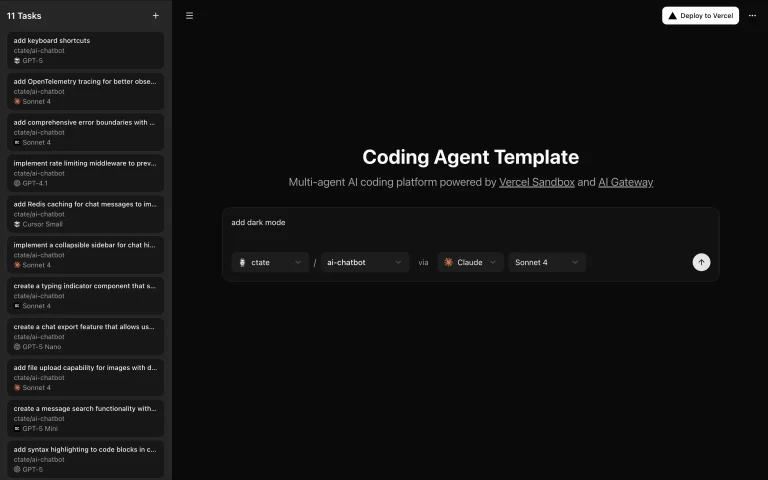

Customizing the Agent

The agent builder accepts configuration options for model selection, temperature, and system prompts. To modify the agent behavior, edit the builder configuration in src/lib/agent/builder.ts.

The system prompt defines the agent’s personality and capabilities. You can add instructions about how to format responses, which tools to prefer, or domain-specific knowledge. The template uses a default prompt that explains the agent’s capabilities and encourages helpful responses.

To add custom tools beyond MCP servers, extend the tools array in the builder. LangGraph.js supports any tool that implements the tool interface with name, description, and execution function. You can create tools that call internal APIs, perform calculations, or interact with databases.
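As an illustration of the name, description, and execution-function shape, here is a dependency-free sketch of a calculation tool. The `SimpleTool` interface and `percentChangeTool` are hypothetical; in the template you would define the tool with whatever tool helper the builder already uses, and tools that call APIs or databases would normally be asynchronous.

```typescript
// Hypothetical minimal tool shape: a name, a description, and an execution function.
// Kept synchronous for brevity; tools that call APIs or databases would return a Promise.
interface SimpleTool<Input> {
  name: string;
  description: string;
  execute: (input: Input) => string;
}

// A calculation tool: percentage change between two numbers.
const percentChangeTool: SimpleTool<{ from: number; to: number }> = {
  name: "percent_change",
  description: "Compute the percentage change from one number to another.",
  execute: ({ from, to }) => {
    if (from === 0) {
      return "undefined (cannot compute a change from zero)";
    }
    return `${(((to - from) / from) * 100).toFixed(1)}%`;
  },
};

// Custom tools would sit alongside the MCP-provided ones in the builder's tools array.
const customTools = [percentChangeTool];

console.log(customTools[0]?.execute({ from: 200, to: 250 }));
```

The description matters as much as the code: it is the text the model reads when deciding whether the tool fits the task.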

Managing Multiple Models

The template supports switching between OpenAI and Google AI models. Configure available models in the settings panel. Each model has different capabilities, pricing, and performance characteristics.

GPT models provide strong reasoning and instruction following. Gemini models excel at long context understanding and multimodal inputs. You can set different models for different threads based on your needs.

Model binding happens in the agent builder, which creates a ChatOpenAI or ChatGoogleGenerativeAI instance based on the configuration. The builder passes the model to LangGraph’s createReactAgent function, which handles message formatting and response parsing.
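The selection step that precedes model construction can be sketched without any provider SDK. In this sketch the environment variable names come from the .env.local example earlier in this guide, while `resolveModelChoice`, the default provider, the default temperature, and the placeholder model names are assumptions; the template's own selection logic may look different.

```typescript
type ModelProvider = "openai" | "google";

interface ModelChoice {
  provider: ModelProvider;
  model: string;
  temperature: number;
}

// Env var names as used in the .env.local example.
const API_KEY_VARS: Record<ModelProvider, string> = {
  openai: "OPENAI_API_KEY",
  google: "GOOGLE_API_KEY",
};

// Placeholder model names; substitute the models you actually use.
const DEFAULT_MODELS: Record<ModelProvider, string> = {
  openai: "your-openai-model-name",
  google: "your-gemini-model-name",
};

// Fill in defaults and fail early if the chosen provider has no API key configured.
function resolveModelChoice(
  request: Partial<ModelChoice>,
  env: Record<string, string | undefined>,
): ModelChoice {
  const provider: ModelProvider = request.provider ?? "openai"; // assumed default provider
  const keyVar = API_KEY_VARS[provider];
  if (!env[keyVar]) {
    throw new Error(`${keyVar} is not set, so provider "${provider}" cannot be used`);
  }
  return {
    provider,
    model: request.model ?? DEFAULT_MODELS[provider],
    temperature: request.temperature ?? 0, // assumed default temperature
  };
}

const choice = resolveModelChoice({ provider: "google" }, { GOOGLE_API_KEY: "example-key" });
console.log(choice);
```

The resolved `ModelChoice` is what you would then hand to the code that constructs the ChatOpenAI or ChatGoogleGenerativeAI instance.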

Production Deployment

For production deployment, build the application and configure environment variables on your hosting platform. The template works with Vercel, AWS, or any Node.js hosting environment that supports Next.js.

pnpm build

pnpm start

Set up a managed PostgreSQL database instead of running Docker locally. Update the DATABASE_URL to point to your production database. Run migrations on the production database before starting the application.

Configure rate limiting and authentication for the API routes. The template does not include authentication by default, so you need to add middleware that validates user sessions before allowing agent interactions.

Monitor database checkpoint growth over time. The checkpointer stores full state at each step, which can consume storage for long conversations. Implement cleanup policies that archive or delete old threads based on your retention requirements.
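A retention policy starts with deciding which threads have expired. The helper below is a minimal sketch: `ThreadRecord` reuses the `id` and `updatedAt` fields from the Thread model, while `findExpiredThreads` and the 30-day window are hypothetical. Deleting or archiving the selected threads, along with their checkpoints, is left to your own database code.

```typescript
// Subset of the Thread model's fields that the policy needs.
interface ThreadRecord {
  id: string;
  updatedAt: Date;
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Return the IDs of threads that have not been updated within the retention window.
function findExpiredThreads(
  threads: ThreadRecord[],
  retentionDays: number,
  now: Date = new Date(),
): string[] {
  const cutoff = now.getTime() - retentionDays * MS_PER_DAY;
  return threads
    .filter((thread) => thread.updatedAt.getTime() < cutoff)
    .map((thread) => thread.id);
}

const sampleThreads: ThreadRecord[] = [
  { id: "old-thread", updatedAt: new Date("2024-01-01T00:00:00Z") },
  { id: "recent-thread", updatedAt: new Date("2024-02-15T00:00:00Z") },
];

// With a 30-day window evaluated on 1 March 2024, only the January thread expires.
console.log(findExpiredThreads(sampleThreads, 30, new Date("2024-03-01T00:00:00Z")));
```

Passing `now` as a parameter keeps the function deterministic, which makes the policy easy to test before pointing it at production data.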

Related Resources

- LangGraph.js Documentation: The official documentation for LangGraph.js, providing detailed information on building stateful, multi-actor applications.

- Model Context Protocol (MCP): The specification for MCP, an open protocol for integrating LLM applications with external tools and data sources.

- Next.js Documentation: The official guide for the Next.js framework, covering everything from basic features to advanced topics.

- Prisma Documentation: The official documentation for the Prisma ORM, including guides on schema modeling, migrations, and client usage.

FAQs

Q: Can I use this template with other AI model providers besides OpenAI and Google?

A: The template currently supports OpenAI and Google AI models through LangChain.js abstractions. You can add support for other providers by creating model instances that implement the BaseChatModel interface. LangChain.js provides integrations for Anthropic, Cohere, and other providers. You would need to install the appropriate package, configure API keys, and update the model selection logic in the agent builder.

Q: How do I prevent the database from growing too large with conversation history?

A: The checkpoint system stores complete agent state at each step, which accumulates over time. Implement a cleanup strategy by adding a scheduled job that deletes threads older than a certain age or archives them to cold storage. You can also limit the number of messages loaded from history by modifying the checkpointer configuration to only restore recent state. The Prisma schema includes timestamps that make it straightforward to query and delete old records.

Q: What happens if an MCP server becomes unavailable during a conversation?

A: The template includes error handling for tool execution failures. If an MCP server is unreachable, the agent receives an error message and can inform the user about the problem. The streaming service catches exceptions and sends error events to the client. You can improve resilience by implementing retry logic in the MCP client or providing fallback tools that offer similar functionality.
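One possible shape for such retry logic is a generic wrapper with exponential backoff. This is a sketch, not part of the template: `withRetry`, its defaults, and the simulated flaky call are all hypothetical, and you would wrap whatever function actually invokes the MCP tool.

```typescript
// Retry an async operation, doubling the wait after each failed attempt.
async function withRetry<T>(
  operation: () => Promise<T>,
  maxAttempts: number = 3,
  baseDelayMs: number = 100,
): Promise<T> {
  let lastError: unknown = new Error("operation was never attempted");
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation();
    } catch (error) {
      lastError = error;
      if (attempt < maxAttempts) {
        // Wait baseDelayMs, then 2x, then 4x, and so on.
        const delayMs = baseDelayMs * Math.pow(2, attempt - 1);
        await new Promise<void>((resolve) => setTimeout(resolve, delayMs));
      }
    }
  }
  // Every attempt failed: surface the most recent error to the caller.
  throw lastError;
}

// Simulated tool call that fails twice before succeeding.
let attemptsMade = 0;
async function flakyToolCall(): Promise<string> {
  attemptsMade += 1;
  if (attemptsMade < 3) {
    throw new Error("MCP server unreachable");
  }
  return "tool result";
}

const retried: Promise<string> = withRetry(flakyToolCall, 3, 1);
retried.then((result) => console.log(result, "after", attemptsMade, "attempts"));
```

Retries suit transient failures such as a server restart. For an outage that outlasts the attempts, the error still propagates, so the agent can report the problem as described above.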

Q: How do I add authentication to restrict who can use the agent?

A: The template does not include built-in authentication. You need to add middleware that checks user sessions before allowing access to the chat interface and API routes. Next.js supports several authentication patterns including NextAuth.js, Clerk, and custom JWT validation. Add user identification to the thread model to associate conversations with specific users. Update the API routes to validate authentication tokens and filter threads by user.

Q: Can the agent use multiple tools in a single response?

A: Yes, the agent can chain multiple tool calls to accomplish complex tasks. LangGraph’s ReAct agent pattern allows the model to iteratively select tools, observe results, and decide on next actions. Each tool call goes through the approval workflow if enabled. The agent continues until it determines the task is complete or reaches a maximum iteration limit. You can observe this behavior when asking the agent to perform tasks that require multiple steps.