The Future of Web Dev

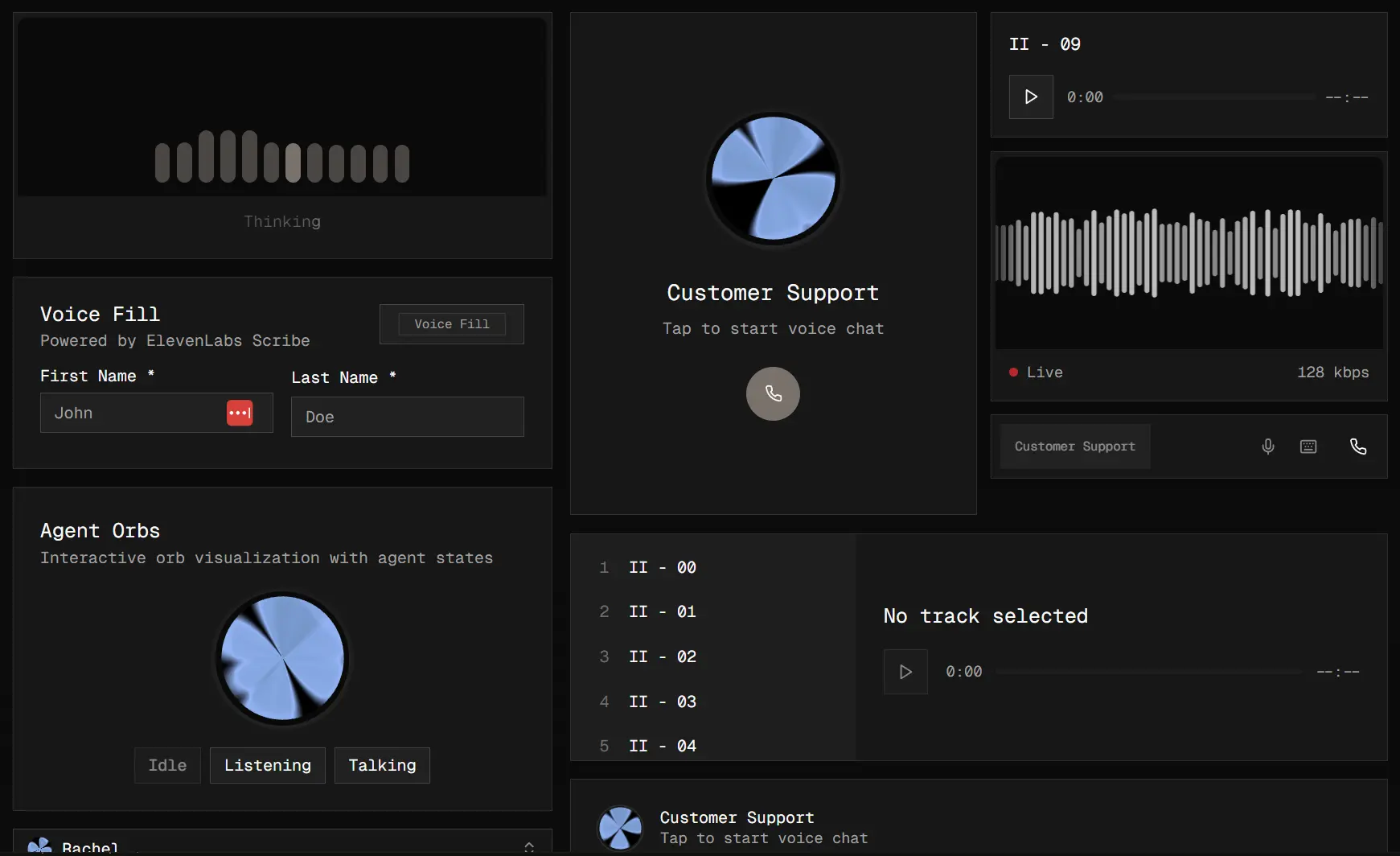

Build Multimodal AI Agents with shadcn/ui & ElevenLabs UI

Build AI-powered voice and chat UI with ElevenLabs UI. Get customizable, pre-built components for agents, audio, and transcription.

ElevenLabs UI is ElevenLabs’ official UI component library. It provides a collection of open-source AI agent and audio components for building multimodal agentic user experiences.

You can use the UI components to build modern apps for voice agents, real-time audio visualization, and interactive chat systems.

Features

🎙️ Voice conversation interface with microphone controls, text input, and real-time waveform visualization for building agent experiences.

🎨 3D animated orb visualization built with Three.js that reacts to audio input and displays agent states with custom colors.

📊 Multiple audio visualization options including bar visualizers, live waveforms, and canvas-based rendering with customizable modes.

💬 Complete message system with composable components, avatar support, and automatic styling for user and assistant messages.

⚡ Streaming markdown renderer that displays AI responses with smooth character-by-character animations using Streamdown.

🎵 Audio player component with progress controls and playback management for music, podcasts, and voice content.

🔍 Voice picker with searchable interface, audio preview, and direct integration with ElevenLabs voice library.

📝 Conversation container with auto-scroll behavior and sticky-to-bottom functionality for chat interfaces.

🎚️ Interactive voice recording button with live waveform visualization and automatic state transitions.

🛠️ Direct codebase integration that lets you edit and customize component source files instead of relying on locked library code.
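To make the bar visualizer idea more concrete, here is a minimal sketch of how raw frequency data could be reduced to a handful of bar heights. It is not taken from the ElevenLabs UI source: `toBarHeights` is a hypothetical helper, and the input is assumed to be byte magnitudes (0 to 255) such as those filled in by the Web Audio `AnalyserNode.getByteFrequencyData` method.

```typescript
// Hypothetical helper, not part of ElevenLabs UI.
// Averages byte frequency magnitudes (0-255) into `barCount` bins
// and normalizes each bin to a height between 0 and 1.
function toBarHeights(frequencies: Uint8Array, barCount: number): number[] {
  if (barCount <= 0 || frequencies.length === 0) return [];

  // Number of input samples that feed each bar (at least one).
  const binSize = Math.max(1, Math.floor(frequencies.length / barCount));
  const heights: number[] = [];

  for (let bar = 0; bar < barCount; bar++) {
    const start = bar * binSize;
    const end = Math.min(start + binSize, frequencies.length);

    if (start >= end) {
      heights.push(0); // fewer samples than bars: pad with silence
      continue;
    }

    let sum = 0;
    for (let i = start; i < end; i++) sum += frequencies[i] ?? 0;
    heights.push(sum / (end - start) / 255);
  }
  return heights;
}
```

In a real component, a function like this would typically run once per animation frame, with the resulting heights mapped to CSS heights or canvas rectangles.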

Use Cases

- Voice-enabled customer support chatbots that combine text and audio responses with real-time transcription and playback controls.

- AI agent dashboards that display conversation history, audio visualizations, and voice interaction states for monitoring and debugging.

- Podcast applications with live transcription, waveform scrubbing, and synchronized text display for accessibility and navigation.

- Voice-based form interfaces that capture user input through speech with visual feedback and confirmation displays.

- Educational platforms that use voice agents for language learning with pronunciation visualization and interactive conversation practice.

How to Use It

1. You can add ElevenLabs UI components to your project using one of two command-line interface (CLI) tools. The first option is the dedicated Agents CLI, which is the most direct method.

```bash
pnpm dlx @elevenlabs/agents-cli@latest add orb
npx @elevenlabs/agents-cli@latest add orb
yarn @elevenlabs/agents-cli@latest add orb
bunx --bun @elevenlabs/agents-cli@latest add orb
```

2. If your project already uses shadcn/ui, you can use its standard CLI to install components from the ElevenLabs registry.

```bash
pnpm dlx shadcn@latest add https://ui.elevenlabs.io/r/orb.json
npx shadcn@latest add https://ui.elevenlabs.io/r/orb.json
yarn shadcn@latest add https://ui.elevenlabs.io/r/orb.json
bunx --bun shadcn@latest add https://ui.elevenlabs.io/r/orb.json
```

3. After installation, the component’s code is placed directly into your project, typically under the components/ui folder.
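The shadcn commands in step 2 all share one shape: a package-manager runner, `shadcn@latest add`, and a registry URL. The sketch below spells that pattern out. The runner prefixes and the `orb` URL come from the commands in this article; the assumption that other components follow the same `<name>.json` URL pattern is mine, and `registryUrl` and `shadcnAddCommand` are hypothetical helper names.

```typescript
// Base URL taken from the install commands in this article.
// Assumption: every component is published as <name>.json under it.
const REGISTRY_BASE = "https://ui.elevenlabs.io/r";

type PackageManager = "pnpm" | "npm" | "yarn" | "bun";

// Runner prefixes copied from the commands shown in step 2.
const RUNNERS: Record<PackageManager, string> = {
  pnpm: "pnpm dlx",
  npm: "npx",
  yarn: "yarn",
  bun: "bunx --bun",
};

// Hypothetical helper: builds the registry URL for a component name.
function registryUrl(component: string): string {
  return `${REGISTRY_BASE}/${component}.json`;
}

// Hypothetical helper: builds the full install command string.
function shadcnAddCommand(pm: PackageManager, component: string): string {
  return `${RUNNERS[pm]} shadcn@latest add ${registryUrl(component)}`;
}

console.log(shadcnAddCommand("pnpm", "orb"));
```

For `orb`, this reproduces the pnpm command from step 2. Before relying on it for another component, check that component's documentation page for its actual registry URL.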

4. You can then import and use the components in your application just like any other React component.

For example, you can create a 3D animated sphere that responds to audio input.

```tsx
// Next.js directive: treat this file as a Client Component
"use client"

import { Card } from "@/components/ui/card"
import { Orb } from "@/components/ui/orb"

export default function Page() {
  // Center the orb inside a padded card
  return (
    <Card className="flex items-center justify-center p-8">
      <Orb />
    </Card>
  )
}
```

5. The following UI components are available:

- Audio Player: A customizable player for audio content with progress controls and playback management.

- Bar Visualizer: A real-time audio frequency visualizer with state-based animations for voice agents and audio interfaces.

- Conversation: A scrolling container for chat interfaces that features auto-scroll and sticky-to-bottom behavior.

- Conversation Bar: A complete voice conversation interface that includes microphone controls, text input, and real-time waveform visualization.

- Live Waveform: A real-time, canvas-based audio waveform visualizer that uses microphone input and has customizable rendering modes.

- Message: A set of composable message components with options for an avatar, content variants, and automatic styling for user and assistant messages.

- Orb: A 3D animated orb built with Three.js that is audio-reactive and can visualize agent states with custom colors.

- Response: A streaming markdown renderer that displays AI responses with smooth, character-by-character animations.

- Shimmering Text: A component for creating text with an animated shimmering effect.

- Voice Button: An interactive button that manages voice recording states, displays a live waveform visualization, and provides automatic feedback transitions.

- Voice Picker: A searchable voice selector that includes an audio preview, orb visualization, and integration with ElevenLabs voices.

- Waveform: A collection of canvas-based components for audio waveform visualization that supports recording, playback scrubbing, and microphone input.
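The "sticky-to-bottom" behavior described for the Conversation component can be reasoned about as a small decision rule: follow new content only if the user was already at the bottom. The following is a minimal sketch of that rule under my own assumptions, not the library's implementation. `ScrollMetrics`, `isNearBottom`, `nextScrollTop`, and the 16-pixel threshold are all hypothetical.

```typescript
// Hypothetical shape: mirrors the DOM properties scrollTop,
// clientHeight, and scrollHeight of a scrollable element.
interface ScrollMetrics {
  scrollTop: number;
  clientHeight: number;
  scrollHeight: number;
}

// True when the viewport is within `thresholdPx` of the bottom.
function isNearBottom(m: ScrollMetrics, thresholdPx = 16): boolean {
  const distanceFromBottom = m.scrollHeight - m.clientHeight - m.scrollTop;
  return distanceFromBottom <= thresholdPx;
}

// Decides where to scroll after new content grows the container.
// `before` holds the metrics measured before the content was added.
function nextScrollTop(
  before: ScrollMetrics,
  newScrollHeight: number,
  thresholdPx = 16,
): number {
  // The user scrolled up to read history: leave their position alone.
  if (!isNearBottom(before, thresholdPx)) return before.scrollTop;
  // The user was pinned to the bottom: follow the new bottom edge.
  return Math.max(0, newScrollHeight - before.clientHeight);
}
```

Measuring before the update matters: once a new message is appended, the distance from the bottom has already grown, so the "was the user at the bottom?" check has to use the earlier metrics.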

Related Resources

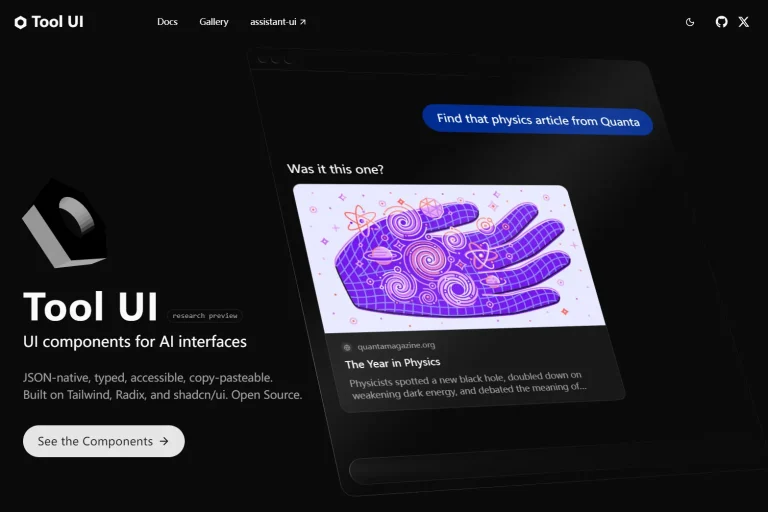

- shadcn/ui – The component infrastructure that ElevenLabs UI extends, providing accessible React components with Radix UI and Tailwind CSS.

- ElevenLabs API Documentation – Official documentation for integrating ElevenLabs voice AI services with your applications.

- Three.js Documentation – Reference for understanding and customizing the 3D orb visualization component built with Three.js.

- Streamdown – The markdown streaming library used by the response component for character-by-character animations.

FAQs

Q: Can I modify the component source code after installation?

A: Yes. ElevenLabs UI installs components as source files in your project directory. You can edit any component file to change functionality, styling, or behavior. The components live in your codebase rather than in a locked npm package.

Q: Do I need an ElevenLabs API key to use these components?

A: The visual components work without an API key. Components that interact with ElevenLabs services, like the voice picker, require an API key for full functionality. You can use the UI components independently for custom audio implementations.
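As a rough illustration of where an API key comes into play, the sketch below builds (but does not send) a request for the ElevenLabs voice list. It assumes the public REST endpoint `https://api.elevenlabs.io/v1/voices` and the `xi-api-key` header; verify both against the official API documentation. `VoicesRequest` and `buildVoicesRequest` are hypothetical names and are not part of ElevenLabs UI.

```typescript
// Hypothetical shape for a request description; nothing is sent here.
interface VoicesRequest {
  url: string;
  headers: Record<string, string>;
}

// Builds the request for listing voices.
// Assumption: endpoint and header name match the current ElevenLabs API.
function buildVoicesRequest(apiKey: string): VoicesRequest {
  if (apiKey.trim() === "") {
    throw new Error("Missing ElevenLabs API key");
  }
  return {
    url: "https://api.elevenlabs.io/v1/voices",
    headers: { "xi-api-key": apiKey },
  };
}
```

A request like this would normally be sent from server-side code, for example with `fetch(request.url, { headers: request.headers })`, so that the key is never shipped to the browser.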

Q: Why are my components not styled correctly?

A: Ensure your project’s globals.css file correctly imports Tailwind CSS and includes the necessary base styles for shadcn/ui.